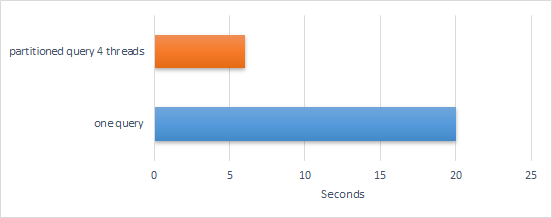

Manually segmenting a query into pieces you can execute in parallel can give a good performance boost. Since Firebird 4.0 you can have shared transaction snapshots so your results can even be correct.

Your query needs to have segments you can know beforehand or can cheaply compute. In my example it is sales per month where you can easily segment per month.

Performance

Schema

create table sale

(

sale_id integer GENERATED BY DEFAULT AS IDENTITY PRIMARY KEY,

y integer,

m integer,

d integer,

qty decimal(18,2)

);

CREATE INDEX idx_m_y ON sale (y,m);Code C# .Net

var topt = new FbTransactionOptions();

topt.TransactionBehavior = FbTransactionBehavior.Concurrency;

var GetReport_onequery = (FbConnection con) =>

{

var tra = con.BeginTransaction(topt);

var cmd = con.CreateCommand();

cmd.CommandText = "select y,m,sum(qty) FROM sale GROUP BY y,m";

cmd.Transaction = tra;

cmd.Prepare();

var reader = cmd.ExecuteReader();

var result = new List<sales_per_month>();

while (reader.Read())

{

var sr = new sales_per_month();

sr.y = reader.GetInt32(0);

sr.m = reader.GetInt32(1);

sr.sum_qty = reader.GetDecimal(2);

result.Add(sr);

}

tra.Commit();

return result;

};

var GetReport_batch = (List<sales_per_month> batch, FbConnection con) =>

{

var tra = con.BeginTransaction(topt);

var cmd = con.CreateCommand();

cmd.CommandText = "select sum(qty) FROM sale WHERE y = @y AND m = @m";

cmd.Transaction = tra;

var p_y = cmd.Parameters.AddWithValue("y", (int)0);

var p_m = cmd.Parameters.AddWithValue("m", (int)0);

cmd.Prepare();

var result = new List<sales_per_month>();

foreach (var row in batch)

{

p_y.Value = row.y;

p_m.Value = row.m;

var sr = new sales_per_month();

sr.y = row.y;

sr.m = row.m;

sr.sum_qty = (decimal)cmd.ExecuteScalar();

}

tra.Commit();

return result;

};

GetReport_onequery(c);

var paraopts = new ParallelOptions();

paraopts.MaxDegreeOfParallelism = 4;

var report_pieces = Utils.DivideIntoBatches(sumresults, 3);

var tra_shared = c.BeginTransaction(topt);

var txInfo = new FbTransactionInfo(tra_shared);

topt.SnapshotAtNumber = txInfo.GetTransactionSnapshotNumber();

Parallel.ForEach(report_pieces, parallelOptions: paraopts, (piece) =>

{

using var c2 = new FbConnection(cb.ToString());

c2.Open();

GetReport_batch(piece, c2);

});

tra_shared.Commit();

//utils.cs

public static List<List<T>> DivideIntoBatches<T>(List<T> data,int batchsize)

{

var result = new List<List<T>>();

int start_offset = 0;

int total_count = data.Count;

while (start_offset < total_count)

{

var blen = Math.Min(batchsize, total_count - start_offset);

result.Add(data.GetRange(start_offset, blen));

start_offset += blen;

}

return result;

}