Firebird 4.0 includes new batch api and the .net driver recently added support for this.

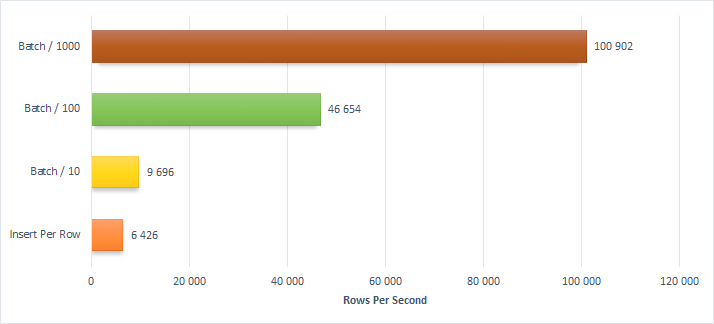

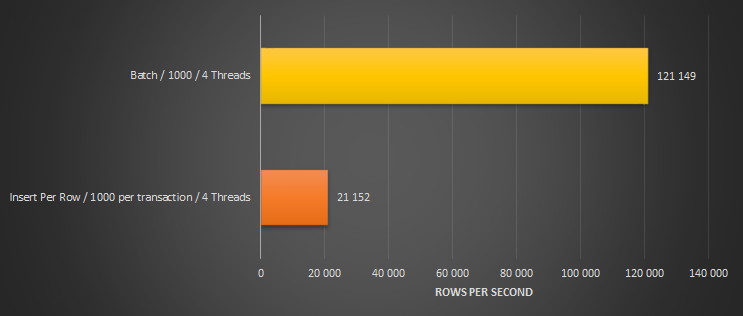

The increased performance for bulk loading is quite incredible.

Some things to keep in mind when using batching api is batch size limits and error handling which is little more complex. Some details here – https://github.com/FirebirdSQL/NETProvider/blob/master/docs/batching.md

Performance

The exact numbers are specific to my machine, code,etc. but the relative performance should be true.

Benchmark Code

Schema:

create table sale

(

sale_id integer GENERATED BY DEFAULT AS IDENTITY PRIMARY KEY,

y integer,

m integer,

d integer,

qty decimal(18,2)

);

CREATE INDEX idx_m_y ON sale (y,m);Extract from the benchmark code showing the important bits:

var topt = new FbTransactionOptions();

topt.TransactionBehavior = FbTransactionBehavior.Concurrency;

var ProcessSequentiallyInBatch = (List<sale> batch,FbConnection con) =>

{

var tra = con.BeginTransaction(topt);

using var InsertBatch = new FbBatchCommand("INSERT INTO sale (y,m,d,qty) VALUES (@y,@m,@d,@qty)", con, tra);

InsertBatch.Prepare();

foreach (var row in batch)

{

var pbatch1 = InsertBatch.AddBatchParameters();

pbatch1.AddWithValue("y", row.y);

pbatch1.AddWithValue("m", row.m);

pbatch1.AddWithValue("d", row.d);

pbatch1.AddWithValue("qty", row.qty);

}

InsertBatch.ExecuteNonQuery();

tra.Commit();

};

var ProcessSequentiallyIndivid = (List<sale> batch, FbConnection con) =>

{

var tra = con.BeginTransaction(topt);

var cmd = con.CreateCommand();

cmd.CommandText = "INSERT INTO sale (y,m,d,qty) VALUES (@y,@m,@d,@qty)";

cmd.Transaction = tra;

var p_y = cmd.Parameters.AddWithValue("y",(int) 0);

var p_m = cmd.Parameters.AddWithValue("m", (int)0);

var p_d = cmd.Parameters.AddWithValue("d", (int)0);

var p_qty = cmd.Parameters.AddWithValue("qty", (decimal)0);

cmd.Prepare();

foreach (var row in batch)

{

p_y.Value = row.y;

p_m.Value = row.m;

p_d.Value = row.d;

p_qty.Value = row.qty;

cmd.ExecuteNonQuery();

}

tra.Commit();

};

var pieces = Utils.DivideIntoBatches(data, 1000);

foreach (var piece in pieces)

{

//ProcessSequentiallyIndivid(piece, c);

//ProcessSequentiallyInBatch(piece, c);

}

var paraopts = new ParallelOptions();

paraopts.MaxDegreeOfParallelism = 4;

Parallel.ForEach(pieces, parallelOptions: paraopts, (piece) =>

{

using var c2 = new FbConnection(cb.ToString());

c2.Open();

//ProcessSequentiallyIndivid(piece, c2);

//ProcessSequentiallyInBatch(piece, c2);

});

//utils

public static List<List<T>> DivideIntoBatches<T>(List<T> data,int batchsize)

{

var result = new List<List<T>>();

int start_offset = 0;

int total_count = data.Count;

while (start_offset < total_count)

{

var blen = Math.Min(batchsize, total_count - start_offset);

result.Add(data.GetRange(start_offset, blen));

start_offset += blen;

}

return result;

}